How we got DAGs working across multiple Lakehouses in Fabric

What we learned from using DAGs in a multi-lakehouse notebook setup

Have you ever used a DAG (Directed Acyclic Graph) in your Microsoft Fabric notebooks?

If not — you should. DAGs are a powerful way to orchestrate notebooks, especially if you’re building modular pipelines or prefer a notebook-first, pro-code approach over the built-in Data Pipeline tool.

With DAGs, you can:

Combine multiple notebooks into one flow

Set clear dependencies between them (e.g., Notebook C runs only after A and B finish)

Pass parameters between notebooks.

If you prefer working in code and already have your logic split into notebooks, this solution has you covered.

You can watch this video from Guy in a Cube or read this article from Fabric Guru for a solid introduction.

🔁 DAG + Move data between multiple lakehouses

In a recent project, we orchestrated multiple notebooks across several Lakehouses. Each notebook was part of a Medallion architecture, moving data from bronze ➡ silver ➡ gold layers.

Here’s a simple code snippet to orchestrate this workflow. As you can see, I use the dependencies parameter to define the desired execution order of the notebooks:

dag = {

"activities": [

{

"name": "Bronze to silver",

"path": "Bronze to silver",

"timeoutPerCellInSeconds": 900,

},

{

"name": "Bronze to silver HR data loader",

"path": "Bronze to silver HR data loader",

"dependencies": ["Bronze to silver"],

"timeoutPerCellInSeconds": 900

},

{

"name": "Silver to gold - Legacy report data",

"path": "Silver to gold - Legacy report data",

"dependencies": [

"Bronze to silver HR data loader"

]

}

],

"timeoutInSeconds": 3600,

"concurrency": 2

}

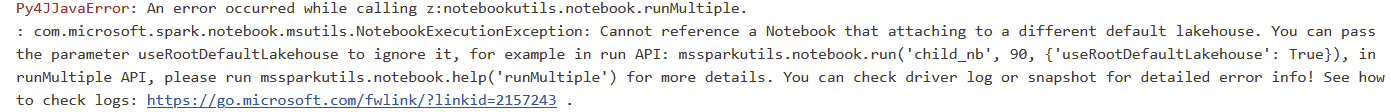

notebookutils.notebook.runMultiple(dag, {"displayDAGViaGraphviz": True})However, when you try to run this DAG, you’ll likely encounter an error related to the default Lakehouse context. This happens because the DAG orchestration engine doesn’t know which Lakehouse to use unless it’s explicitly referenced in the notebook.

🛠️ How to Fix

To overcome this issue you’ll need to add two things into your code:

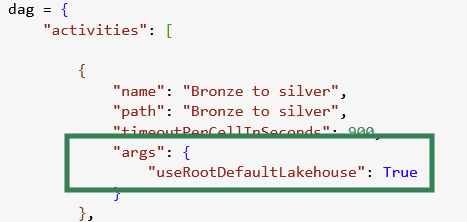

add this argument to your code: "useRootDefaultLakehouse": True

Use absolute paths in all of your notebooks to reference the location of your data.

The first fix is relatively straightforward — and you’ll need to apply it regardless of your setup. Interestingly, we found that even if none of the notebooks (not even the orchestrator one) is connected to a Lakehouse or data source, this flag is still required.

If you happen to know why this is needed, feel free to comment or reach out — I’d love to understand more about the reasoning behind it.

As for the second fix:

According to the related MS documentation:

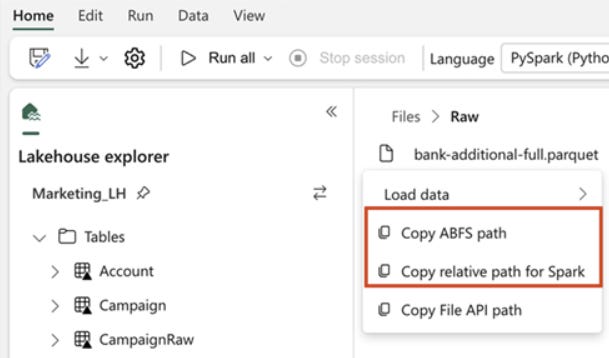

To specify the location to read from, you can use the relative path if the data is from the default lakehouse of your current notebook. Or, if the data is from a different lakehouse, you can use the absolute Azure Blob File System (ABFS) path. Copy this path from the context menu of the data.

A typical absolute path looks like this: abfss://GUID-of-your-FabricWorkspace0c@onelake.dfs.fabric.microsoft.com/GUID-of-your-Lakehouse/Tables/YourSchema/YourTableUse

You can simplify this by defining parts of the path as variables in your notebook — especially if your tenant does not have the Environment Variables (Preview) feature enabled:

workspace_id = "your_workspace_id"

lakehouse_id = "your_lakehouse_id"

table_path = "Tables/Schema/TableName

full_path = f"abfss://{workspace_id}@onelake.dfs.fabric.microsoft.com/{lakehouse_id}/{table_path}"

df = spark.read.format("delta").load(full_path)Additional tweaks needed

In PySpark:

- ❌ Stop using saveAsTable() and read.table() commands

- ✅ Use save() and spark.read.load("absolutepath") instead

In Spark SQL:

- ❌ Avoid the lakehouse.schema.table format like mySilverLakehouse.Schema_for_Processed_Data.FactTable

- ✅ Use: SELECT * FROM delta.`absolutepath_to_your_table

Be sure to:

Add

delta.before the absolute pathWrap the path in backticks (`absolute_path`) to ensure correct syntax

You’ll need this as it seems that Spark SQL doesn’t handle multiple identifiers well (e.g., lakehouse.schema.table). The delta. prefix with an absolute path helps Spark resolve the reference correctly and avoids ambiguity.

🧪 Bonus: Use Environment Variables (If Enabled)

If your Fabric tenant has the Environment Variables (Preview) feature enabled, you can store Lakehouse paths as environment variables and reference them directly in your code.

Benefits:

Cleaner code

Easier to manage and maintain

Switch between environments (e.g., dev, test, prod)

Before hardcoding paths, check whether your tenant has this feature enabled.

Originally, the main reason I wanted to write this article was to help others by summarizing our research and experience with using DAGs in a multi-Lakehouse environment.

But while the post was still in draft, we made further progress with the solution — and we also needed to add logging to the setup. That part turned out to be trickier than expected, especially when it came to:

Catching failures inside individual notebooks

Identifying which stage or notebook the error occurred in

I’ll share a code block at the end of this article.

Feel free to reuse it — and if you have a better logging strategy, I’d love to hear from you!

🌳 Summary

DAGs in Fabric notebooks are powerful. They give you:

More structure

Better orchestration

Greater flexibility — especially for pro-code users

Just remember:

✅ Use absolute paths when referencing Lakehouses

✅ In PySpark, use

save()andload()instead ofsaveAsTable()✅ In Spark SQL, use

delta.\absolutepath``✅ Use environment variables if possible

✅ Implement logging for long-term maintainability

If you want this to be reusable or extensible for observability, consider logging:

DAG run start time / end time

Notebook names

Execution duration

Status (success/fail)

-----Notebooks = []

try:

# Run multiple notebooks using DAG

results = notebookutils.notebook.runMultiple(dag, {"displayDAGViaGraphviz": True})

# Collect the names of all successfully executed notebooks

for activity, res in results.items():

Notebooks.append(activity)

# [Optional] Log metadata for each successful execution

# [Optional] Save success log to a Delta table or another sink

# [Optional] Notify downstream systems or trigger the next steps

except Exception as e:

Notebooks_with_errors = []

filtered_errors = {}

# Identify failed notebooks and extract corresponding error messages

for notebook, error in e.result.items():

if error["exception"]:

Notebooks_with_errors.append(notebook)

filtered_errors[notebook] = error["exception"] # ✅ Only failed notebooks

else:

Notebooks.append(notebook)

# [Optional] Log failure details: notebook names + error messages

# [Optional] Save failure log to Delta table for observability

# [Optional] Raise or propagate the error to stop orchestration or trigger alerts